How we trained a Neural Network to identify Room Types from listing photos with over 95% accuracy.

Image classification is a rapidly growing field of research among neural network and AI researchers. With the advent of deep convolutional neural networks, the "state of the art" has recently been redefined several times a year.

Here at StaySense, we're committed to building state-of-the-art technology and software that vacation rental managers can leverage to compete in a more meaningful way with the huge OTAs. As a company that also operates a growing list of distribution channels, building this technology is of crucial importance as we race to achieve a competitive growth curve.

Before we dive into the results of what we built, let's take a brief detour to explain the 'how' for those not familiar with the subject matter.

What is a Convolutional Neural Network (CNN)?

In its simplest form, a Convolutional Neural Network is a network graph of weights and biases loosely modeled on the human brain (specifically the visual system). It attempts to replicate our brain's ability to use its many millions of interconnected synapses to process input signals in real time and correctly identify objects and spaces.

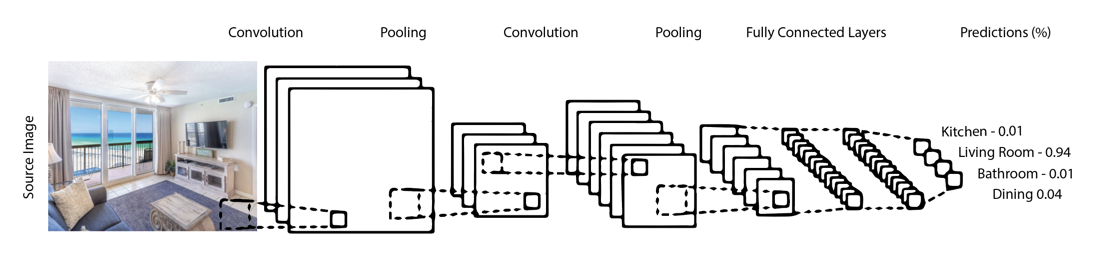

Here is a more traditional visualization of how the model works:

Starting with a sample image (a living room in a beach condo in this example), the image is processed through a complex series of graphs with various mathematical functions connecting each node to other nodes in the graph.
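To make the "mathematical functions connecting each node" a little more concrete, here is a minimal sketch of the core operation in a convolutional layer, written in plain Python. It is an illustration only, not our production model: the 4x4 `patch`, the hand-picked `vertical_edge` filter, and the function names are all assumptions made for this example. In a trained network the filter values are learned rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped before it is applied.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0.0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, value) for value in row] for row in feature_map]


# Hypothetical 4x4 grayscale patch: dark on the left, bright on the right.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

edges = relu(convolve2d(patch, vertical_edge))
for row in edges:
    print(row)
```

The middle column of the output lights up because that is where the dark-to-bright edge sits in the patch. Stacking many such filters, layer after layer, is what lets a network move from edges to shapes to whole rooms.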

The goal of the network is to ultimately create a feature vector that is highly accurate at finding the most important features from an image to then accurately identify the class (room-type) it belongs to.
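The last step, going from a feature vector to a room type, can be sketched roughly like this. This is a simplified illustration under stated assumptions: the three-element feature vector, the `weights`, the `biases`, and the short `ROOM_TYPES` list are made up for the example and are not taken from our model. It shows the common pattern of scoring each class with a linear layer and turning the scores into probabilities with softmax.

```python
import math

# Hypothetical label set, limited to a few room types mentioned in this post.
ROOM_TYPES = ["living room", "kitchen", "laundry room"]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - top) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def classify(feature_vector, weights, biases):
    """Score each room type with a linear layer, then pick the most likely one."""
    scores = [
        sum(w * x for w, x in zip(row, feature_vector)) + bias
        for row, bias in zip(weights, biases)
    ]
    probabilities = softmax(scores)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return ROOM_TYPES[best], probabilities


# Made-up values for illustration only.
feature_vector = [0.9, 0.1, 0.0]
weights = [
    [4.0, 0.0, 0.0],  # living room
    [0.0, 4.0, 0.0],  # kitchen
    [0.0, 0.0, 4.0],  # laundry room
]
biases = [0.0, 0.0, 0.0]

label, probabilities = classify(feature_vector, weights, biases)
print(label, [round(p, 3) for p in probabilities])
```

The "confidence" figures quoted later in this post are probabilities of this kind: one number per room type, all adding up to 100%.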

How we did it + Results

For our dataset, we collected a large number of images from various vacation rental listings across multiple websites, destinations, price ranges, camera orientations, photo qualities, and pixel densities. Next, each image was hand-labeled by a person according to its room type, creating a labeled corpus for training a Neural Network to perform this kind of inference.

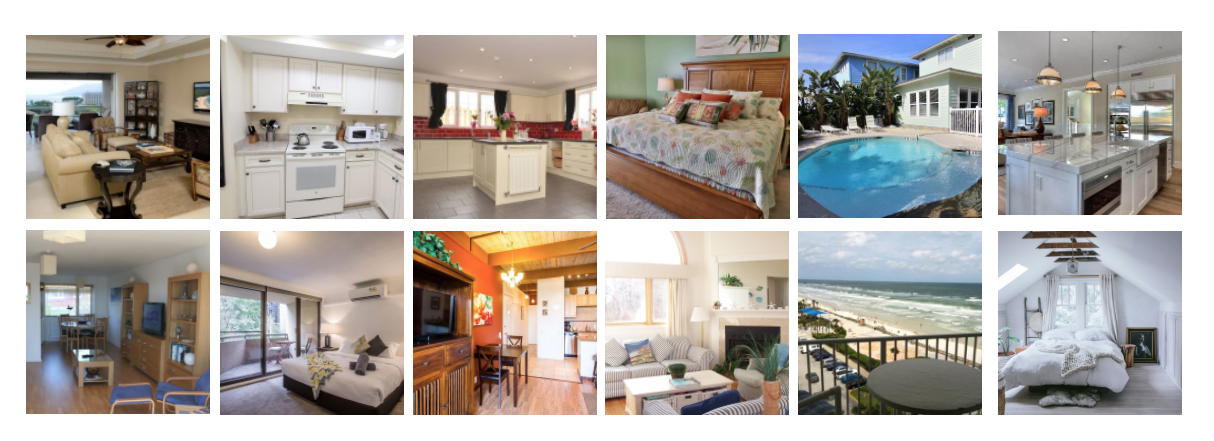

Some example images used in our data set to train our model

The next step was to start the training process and sit and wait. Complex machine learning models can take many hours to train, even on powerful modern GPU machines.

After our model had finally trained, it was time for inference (prediction!) testing. Our initial results are super exciting - here are some highlights:

Our model is 98% confident this is a photo of a living room.

Even with terrible lighting, our model was also 99% confident this too was a photo of a living room:

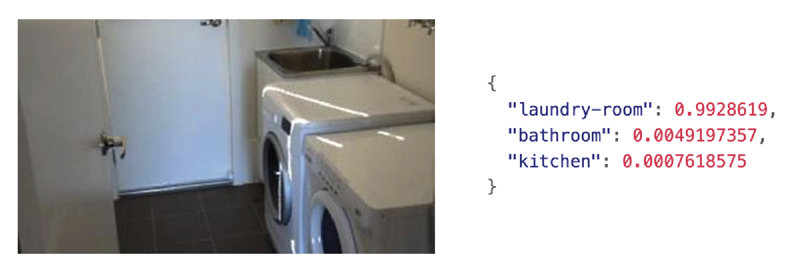

It works for laundry rooms too!

99.8% confidence that this is a laundry room

99% confidence this is also a laundry room

Some other examples:

I like this example because of how poorly lit and staged the room is compared to the example above, yet the prediction is still accurate.

Notice how little context is here, yet the algorithm still nails it!

All in all, we're very pleased with the initial results. We're most impressed with how well the model predicts the right label even when perspective and cropping are less than ideal.

Future Work

While our model is pretty good at predicting the room type when a photo has a high level of contextual specificity, what we've learned is that architectural photography can be fairly ambiguous. For example, consider this photo - is it a dining room or a kitchen?

Our model is confused. It is 53% confident this photo is of a dining room, and 46% confident it's of a kitchen.

From a predictive standpoint, the model's response is no good here. 53% confidence as the greatest score from the model is not nearly enough to make decisions with.
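One simple way to act on this is to accept a label only when the top score clears a cutoff and to flag everything else for review. The sketch below assumes a cutoff of 0.90, which is an illustrative value and not a number from our pipeline; the `decide` function is likewise hypothetical. The scores are the ones quoted in this post.

```python
CONFIDENCE_THRESHOLD = 0.90  # assumed cutoff for illustration, not a tuned value


def decide(scores, threshold=CONFIDENCE_THRESHOLD):
    """Return the top label if its score clears the threshold, else None."""
    label, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence >= threshold:
        return label
    return None  # not confident enough: leave the photo for manual review


ambiguous = {"dining room": 0.53, "kitchen": 0.46}  # the photo discussed above
clear = {"laundry room": 0.998}                     # the laundry room example

print(decide(ambiguous))  # None
print(decide(clear))      # laundry room
```

Where to set the cutoff is a trade-off: a higher value means fewer wrong labels but more photos left unlabeled.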

However, what it reveals about the model is actually quite fascinating. The model, while having lower confidence in its predictions, did correctly identify that this photo seems to be of both a dining room and a kitchen - pretty cool, huh?

Consider this next example - is this a photo of a kitchen, dining room, or a living room?

Our model is 70% sure it's a kitchen, 23% sure it's a dining room, and 6% sure it's the living room.

Again, our model struggles to give us an exact answer for photos like this, but it has still picked up a lot of rich underlying context. We believe there are specific ways to address these types of problems, which are very prominent in our industry, but that will be for a future article!
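As a small illustration of that "rich underlying context", one possible way to read the scores is to keep every room type above some floor instead of only the single top label. This is just a sketch and not necessarily the approach we will take: the `plausible_rooms` helper and the 0.20 floor are assumptions made for the example, while the scores are the ones quoted above.

```python
def plausible_rooms(scores, floor=0.20):
    """Return every room type scoring at least `floor`, most likely first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [label for label, score in ranked if score >= floor]


first_photo = {"dining room": 0.53, "kitchen": 0.46}
second_photo = {"kitchen": 0.70, "dining room": 0.23, "living room": 0.06}

print(plausible_rooms(first_photo))   # ['dining room', 'kitchen']
print(plausible_rooms(second_photo))  # ['kitchen', 'dining room']
```

Read this way, both photos come back tagged as kitchen plus dining room, which matches what a person would likely say about an open floor plan.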

Why this matters

For us, correctly labeling the tens of thousands of images we ingest is the start of a longer data pipeline that will help us optimize image sort order and SERP photos for maximum engagement and bookings.

However, we firmly believe vision systems have a wide variety of use cases in our budding industry - many of which we likely have not considered yet.

This is just the first of many models we're planning to make publicly available - stay tuned!