An uncommon A/B testing approach for small teams in the Vacation Rental Industry

A/B testing in the Vacation Rental Industry is incredibly difficult.

If you set aside the question of “what do I test” and look at teams in the industry who already have the knowledge and expertise, two core problems remain that really complicate the issue.

Issue #1: Meaningful Test Conundrum — Traffic

The first issue, and perhaps the most important, is that the vast majority of vacation rental brands simply do not have enough website traffic to run meaningful tests.

For example, I saw a conversation in an online forum recently where someone was talking about how frequently they help Property Managers (PMs) run split tests (I’m assuming they run an agency of some sort).

However, the example they gave highlighted the issue of traffic in a powerful way.

In their example, they talked about split testing the color of links/buttons on particular pages. While this strategy has largely been seen as outdated since 2008–2009, it still illustrates the point well.

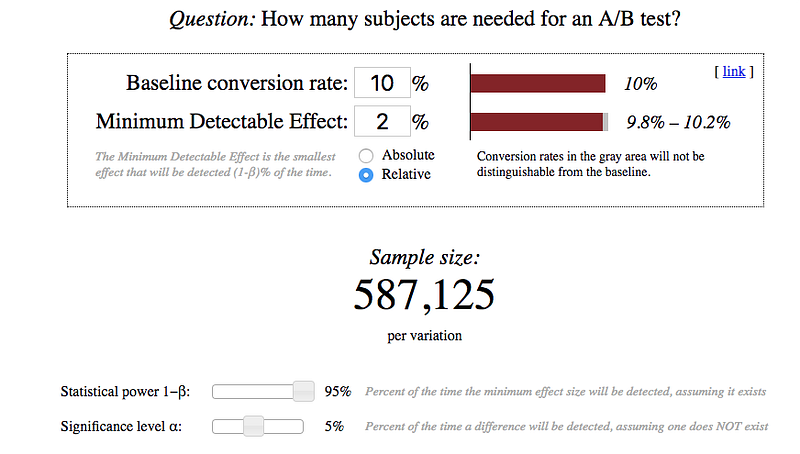

A button color on a website is likely going to have a very small impact on click-through rate (CTR). Consider how many visitors you would need in a test to get a meaningful result.

Being generous, assume the button already has a 10% CTR and that simply “changing the color” gives you a 2% relative uplift (unlikely, but hey ¯\_(ツ)_/¯). To be 95% confident the impact was real, you would need a total of ~1.2 million unique visitors to see your test **and** take an action.
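If you want to check the arithmetic yourself, here is a rough sketch of the standard two-proportion sample-size approximation. The function name and the 95% power setting are my assumptions for illustration (the forum example did not specify them). With 95% confidence and 95% power it lands close to the ~1.2 million total quoted above.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_group(baseline_rate, relative_uplift, confidence=0.95, power=0.95):
    """Approximate visitors needed in EACH group for a two-sided test
    comparing two conversion rates (normal approximation).

    The power default of 0.95 is an assumption; many calculators use 0.80.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    # z-score for the two-sided confidence level and for the desired power
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the binomial variances of the two groups
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# 10% baseline CTR, 2% relative uplift (10% -> 10.2%)
per_group = visitors_per_group(0.10, 0.02)
print(f"per group: {per_group:,}  total: {2 * per_group:,}")
```

Under these assumptions the 2% uplift needs roughly 590,000 visitors per group. Swap in a 30% relative uplift (`visitors_per_group(0.10, 0.30)`) and the requirement drops to roughly 3,000 per group, which is why only big changes are realistically testable on low-traffic pages.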

The reality is that most vacation rental websites in our industry simply do not get 1.2 Million unique visitors annually, let alone that traffic volume to the specific page template you’re trying to run the test on.

Additionally, even if a site got that kind of volume to those pages in, say, a 3–4 month period, buying/shopping patterns in our industry are incredibly seasonal. That means that even if you could “test to significance” over a few months, the results would almost certainly still be skewed by the seasonal variance that is common in our industry.

Unless the changes being tested have huge (30–40%) uplift impacts, a lot of sites in our industry will simply never be able to run meaningful frequentist A/B-style tests.

Issue #2: Small Teams and development budgets

The second issue is that even if there are sites in our industry (and I do think there are a few) that have pages with enough traffic to run meaningful tests in a short enough timeline, a lot of companies in our space simply have really small teams.

This means that trying to come up with new template variations, functionality, layouts, etc. and deploying them as split tests can be an incredibly arduous task.

One that ultimately makes the whole experience of running tests seem nearly not worth it.

So what’s a Property Management company to do?

An Uncommon Solution

I call this uncommon because, while I have read a lot of people hinting at this idea in various ways, I haven’t personally found anyone who has put all of it together. I believe that, executed well, it can help small teams still run meaningful tests and vet ideas early and often.

First — Ugly Baby Syndrome

Be okay with “ugly baby” syndrome. What I mean is, be okay with shipping stuff out that you aren’t proud of… intentionally… on purpose…and with great commitment.

A lot of companies seem to take this approach:

- Have an idea to improve conversions.

- Spend lots of time and resources implementing the change.

- Spend lots of time and resources making sure it’s “perfect”.

- After lots of belaboring, finally decide it’s ready.

- Publish the test.

- Test fails.

- Company says Split Testing isn’t worth the effort.

While I would argue split testing definitely requires a certain “stomach” to stick it out through the failures, it’s easy to understand why small brands would be even more likely to reject a split-testing culture when it takes so much work to get ideas into production, only for them to fail.

This is where you have to embrace ugly baby syndrome.

Second — Perfection is the enemy

Stop perfecting ideas. Iterating faster lets you fail faster, which lets you find winners faster.

What I mean is, take this approach:

- Have an idea to improve conversions.

- Spend the least amount of time possible implementing a hacky version that will give you some idea if people even want this new feature.

- Deploy to production.

- Learn.

That’s right. What I’m saying is, the best companies (as far as I can tell) with smaller teams aren’t implementing perfect functionality. They’re letting customers give them feedback as to whether or not it’s worth their time actually making/building the feature into something good.

Third — Be okay with non-conclusivity

Being okay with non-conclusive results is really important.

There are a lot of good free tools out there that will let you do some back-of-the-napkin calculations to gauge whether there’s a good chance your tests are producing the results you think they are.

For example, chi-squared tests can, in general, give you a directionally accurate look at your data. I would not claim, from a frequentist perspective, that this makes your results statistically significant.
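As a rough illustration of that kind of napkin math, here is a minimal sketch of a Pearson chi-squared test on a 2x2 table of conversions, using only the Python standard library. The function name and the visitor counts are made up for the example, and it skips the continuity correction that some tools apply.

```python
from math import erfc, sqrt


def chi_squared_2x2(conv_a, visitors_a, conv_b, visitors_b):
    """Pearson chi-squared test (no continuity correction) for two variants.

    Returns (statistic, p_value). With 1 degree of freedom the p-value
    equals erfc(sqrt(statistic / 2)). Assumes every expected cell count
    is greater than zero.
    """
    observed = [
        [conv_a, visitors_a - conv_a],  # variant A: converted, did not convert
        [conv_b, visitors_b - conv_b],  # variant B: converted, did not convert
    ]
    total = visitors_a + visitors_b
    row_totals = [visitors_a, visitors_b]
    col_totals = [conv_a + conv_b, total - conv_a - conv_b]

    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed[i][j] - expected) ** 2 / expected
    return statistic, erfc(sqrt(statistic / 2))


# Made-up numbers purely for illustration: 40/1,000 vs. 55/1,000 conversions.
stat, p = chi_squared_2x2(40, 1000, 55, 1000)
print(f"chi2 = {stat:.2f}, p = {p:.3f}")
```

With these invented numbers the p-value comes out a little above 0.1. That is not “significant” by the usual 0.05 cutoff, but under the “good enough” standard here it is the kind of hint that might justify a closer look.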

What I’m advocating for is “good enough” testing to try to learn early from as little effort as possible whether the conversion idea has any merit at all.

Then, if it looks like there is some uplift, go back, put the work into making it great, and launch it again.

This process will make it much easier to push a large funnel of ideas through the site quickly.

Fourth — Understand the risks

Could shipping rough, half-built tests like this hurt your conversions? Probably not. At least not significantly in most cases.

Most tests you run are likely going to have minimal uplift. This means that if you are taking this approach, the potential downside is also pretty small.

This is completely rooted in the “move fast, break stuff, learn fast” mentality.

It should allow you to rack up a lot of small potential wins that, over time, add up to macro conversion lifts across the site. You could confirm those by comparing Analytics data over the same time period, allowing for seasonality.

One final practical example

We once launched a button on our site that did absolutely nothing.

You read that correctly.

We have millions of annual visitors, which for most pages means we can run meaningful tests in reasonable timelines.

BUT. We’re also a small team, which means we have the same time constraints as everyone else.

We had an idea for generating User Generated Content (UGC) that would require a lot of development work behind the button once someone clicked it: interfaces, uploads, code, database reads/writes, etc.

So we literally launched a button that did absolutely nothing.

We watched the data to see what percentage of users would click it, so we could a) gauge demand for the feature, b) estimate the potential volume of users who would go through the whole process, and c) validate whether it made sense to invest the effort to actually develop the feature.
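One rough way to read a “do-nothing button” result like this is to put a range around the observed click rate rather than trusting the single number. The sketch below uses a Wilson score interval. The function name and the counts are hypothetical placeholders, not our real data.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(clicks, visitors, confidence=0.95):
    """Wilson score interval for a click rate (clicks / visitors)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    rate = clicks / visitors
    denom = 1 + z**2 / visitors
    center = (rate + z**2 / (2 * visitors)) / denom
    margin = z * sqrt(rate * (1 - rate) / visitors + z**2 / (4 * visitors**2)) / denom
    return center - margin, center + margin


# Hypothetical counts: 120 clicks on the button from 8,000 visitors who saw it.
low, high = wilson_interval(120, 8000)
print(f"click rate is plausibly between {low:.2%} and {high:.2%}")
```

If even the top of that range is too low to justify the full build, that is a cheap signal to scale the feature back.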

The results?

Not as strong as we hoped. Still good enough that we decided to launch “something”, but nothing as “full-bore”, with all the bells and whistles, as we had originally planned.

We would have felt way worse if we had developed this “awesome new feature” that took lots of hours and excitement only to have it deliver lackluster results.

This way, we let visitors give us real-time feedback on how much effort we should invest in this.

Wrapping Up

I wholly believe all companies of all sizes should be split testing as much as possible. Chris Stucchio has written extensively that “bad testing” is likely still better than a coin toss.

Obviously, I think testing should be done well/right/thoughtfully, but I want to push more people towards viable approaches that can help small teams find micro-improvements early, often, and without wasting a lot of effort.

You may not be able to prove true statistical significance with this approach, but I firmly believe if done well, you will definitely find a lot of small wins that have at least better odds than a coin toss of actually providing improvement and value to your sites over the long haul.